Job submission parameters and example script files

To submit a script, use the qsub <scriptname> command:

-bash-4.1$ qsub serial_job

Your job 10217 ("serial_job") has been submitted

-bash-4.1$ qstat

job-ID prior name user state submit/start at queue slots ja-task-ID

-----------------------------------------------------------------------------------------------------------------

10217 0.00000 serial_job abs4 qw 06/02/2014 12:29:57 1
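When one job has to wait for another (see the -hold_jid option in the table further down), it can be handy to pull the job ID out of the message that qsub prints. The following is only a sketch: the helper name job_id_from_qsub is made up for illustration, and it assumes the message formats shown on this page.

```shell
#!/bin/bash
# Hypothetical helper: extract the numeric job ID from qsub's confirmation
# message. Assumes the "Your job NNN (...)" and
# "Your job-array NNN.a-b:s (...)" formats shown on this page.
job_id_from_qsub() {
    printf '%s\n' "$1" |
        sed -n 's/^Your job\(-array\)\{0,1\} \([0-9][0-9]*\).*/\2/p'
}

# Example using the message from the session above (no scheduler needed).
job_id_from_qsub 'Your job 10217 ("serial_job") has been submitted'
```

The resulting number could then be given to a later submission, for example qsub -hold_jid 10217 next_job, where next_job is a placeholder script name.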

Job Script Directives

The default executing shell is the Bourne shell (/bin/sh). If you require a different shell (e.g. /bin/csh) then this can be specified in a #!/bin/csh directive at the top of the script.

Directives to the batch scheduler allow jobs to request various resources. Directives are preceded by #$; for instance, to run the job from the current working directory, add #$ -cwd to the script.
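As a rough illustration of how directives sit in a script, here is a minimal sketch. The function name job_banner and the ten-minute run time are assumptions for the example; the #$ lines are ordinary comments as far as the shell is concerned, so the script also runs outside the scheduler.

```shell
#!/bin/bash
# Lines starting with "#$" are read by the scheduler; the shell itself
# treats them as comments.
#$ -cwd
#$ -V
#$ -l h_rt=0:10:00

# Placeholder for the real work: report where the job is running.
job_banner() {
    echo "job started in $(pwd) on $(uname -n)"
}
job_banner
```

In a real job the call to job_banner would be replaced by your own program.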

Option | Description | default |

-l h_rt=hh:mm:ss | The wall clock time (amount of real time needed by the job). This parameter must be specified, failure to include this parameter will result in an error message | Required |

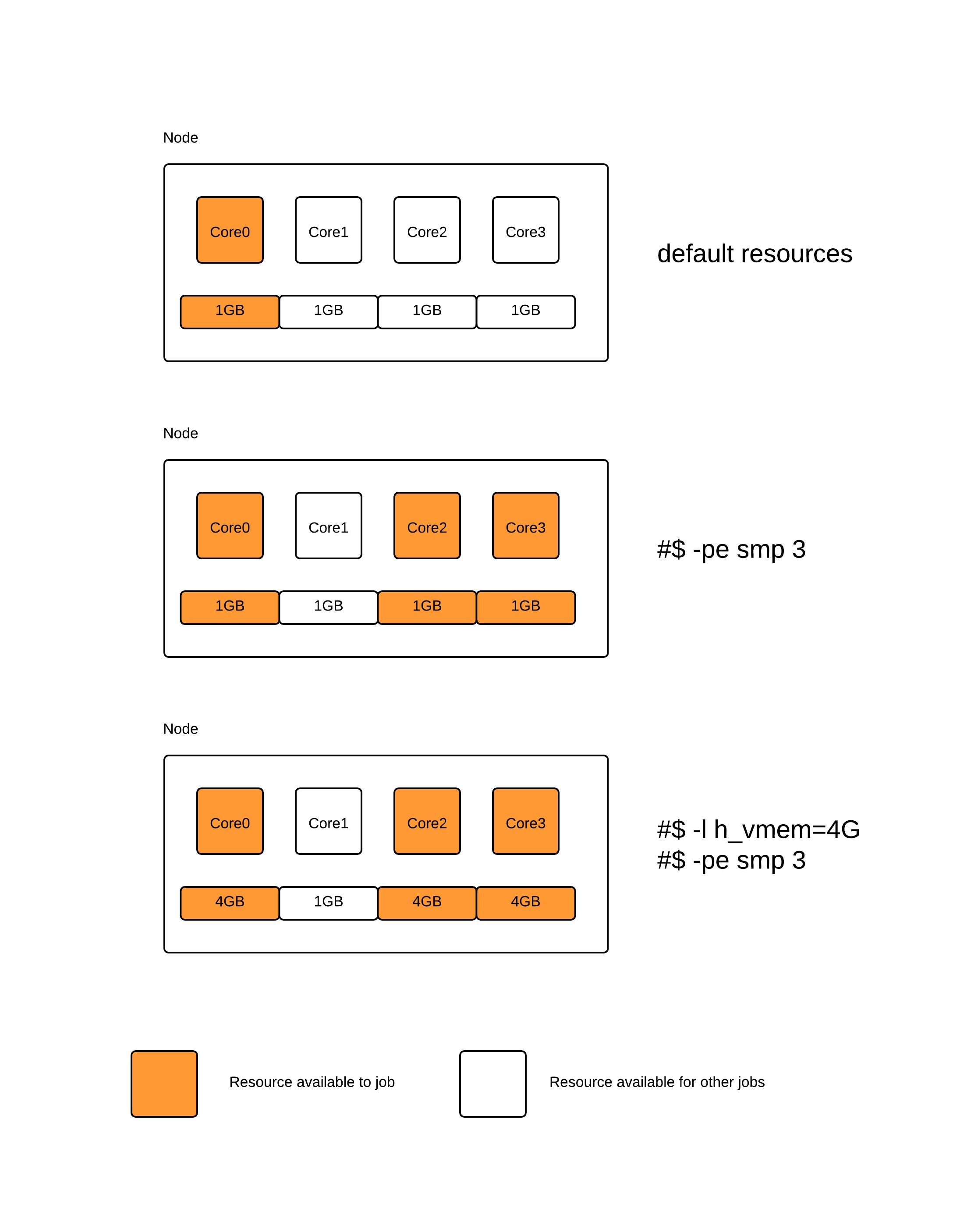

-l h_vmem=memory | Sets the limit of virtual memory required (for parallel jobs per processor). If this is not given it is assumed to be 2GB/process. If you require more memory than 1GB/process you must specify this flag. e.g. #$ -l h_vmem=12G will request 12GB memory. | 2G |

-l h_stack=memory | Sets the stacksize to memory. | unlimited |

-help | Prints a list of options | |

-l nodes=x[,ppn=y][,tpp=z] | Specifies a job for parallel programs using MPI. Assigns whole compute nodes. x is the number of nodes, y is the number of processes per node, z is the number of threads per process. | |

-l np=x[,ppn=y][,tpp=z] | Specifies a job for parallel programs using MPI. Assigns whole compute nodes. x is the number of processes, y is the number of processes per node, z is the number of threads per process. | |

-pe ib np | Specifies a job for parallel programs using MPI, np is the number of cores to be used by the parallel job. | |

-pe smp np | Specifies a job for parallel programs using !OpenMP or threads. np is the number of cores to be used by the parallel job. | |

-hold_jid prevjob | Hold the job until the previous job (prevjob) has completed - useful for chaining runs together, resuming runs from a restart file. | |

-l placement=type | Choose optimal for launching a process topology which provides fully non-blocking communication, minimising latency and maximising bandwidth. Choose good for launching a process topology which provides 2:1 blocking communications. Choose scatter for running processes anywhere on the system without topology considerations. | good |

-t start-stop | Produce an array of sub-tasks (loop) from start to stop, giving the $SGE_TASK_ID variable to identify the individual subtasks. | |

-cwd / -l nocwd | Execute the job from the current working directory; output files are sent to the directory form which the job was submitted. If -l nocwd is used, they are sent to the user's home directory. | -cwd |

-m be | Send mail at the beginning and at the end of the job to the owner. | |

-M email_address@<domain> | Specify mail address for -m option. The default <username>@york.ac.uk will automatically redirect to your email address at you parent institution, which was used for your registration on the facility. | <username>@york.ac.uk |

-V | Export all current environment variables to all spawned processes. Necessary for current module environment to be transferred to SGE shell. | Recommended |

-P project | Account usage to particular project. If user belongs to multiple projects, specifying the project name is compulsory. Can be omitted for users with single project membership | Default project for members of single project. Not specified for users with multiple projects |

| -o directory | place output from job (stdout) in directory. Directory must exist | current working directory |

| -e directory | Place error output from job (stderr) in directory Directory must exist | current working directory |

Environment Variables

In addition to those environment variables specified to be exported to the job via the -v or the -V option (see above), qsub, qsh, and qlogin add the following variables with the indicated values to the variable list (for a complete list see: man qsub).

| Variable | Description |
|---|---|
| SGE_O_HOME | The home directory of the submitting client. |
| SGE_O_PATH | The executable search path of the submitting client. |
| SGE_O_WORKDIR | The absolute path of the current working directory of the submitting client. |
| SGE_STDERR_PATH, SGE_STDOUT_PATH | The pathname of the file to which the standard error/standard output stream of the job is diverted. |
| SGE_TASK_ID | The index number of the current array job task (see the -t option above). This is a unique number in each array job and can be used to reference different input data records, for example. |
| SGE_TASK_FIRST, SGE_TASK_LAST, SGE_TASK_STEPSIZE | The index number of the first/last/increment array job task. |
| ENVIRONMENT | Set to BATCH to identify that the job is being executed under SGE control. |
| JOB_ID | A unique identifier assigned by SGE. |
| JOB_NAME | The job name set by -N. |
| NSLOTS | The number of slots (normally the same as the number of cores) for a parallel job. |
| RESTARTED | Set to 1 if a job was restarted either after a system crash or an interruption. |
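To show how these variables might be used inside a job script, here is a small sketch that prints a one-line summary. The function name job_summary and the fallback values after ":-" are assumptions, added so the snippet also runs outside the scheduler.

```shell
#!/bin/bash
# Print a one-line summary of the job from variables set by the scheduler.
# The ":-" fallbacks are illustrative defaults for running outside a job.
job_summary() {
    echo "job=${JOB_ID:-none} name=${JOB_NAME:-none} task=${SGE_TASK_ID:-none} slots=${NSLOTS:-1} workdir=${SGE_O_WORKDIR:-$PWD}"
}
job_summary
```

Writing such a line at the top of the job's output can make it easier to match log files to jobs later.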

General queue settings

There are several default settings for the batch queue system:

- The runtime must be specified, otherwise jobs will be rejected. The maximum runtime of all queues is 48 hours and no default value is set.

- Unless otherwise specified, a default of 2GB per process (2GB per slot) applies to all jobs.

- Unless otherwise specified, jobs are executed from the current working directory, and output is directed to the directory from which the job was submitted. Please note this is different to standard Sun Grid Engine behaviour, which defaults to a user's home directory. The option -cwd is included in the scripts below for completeness, though it is not strictly needed.

- Environment variables, set up by modules and license settings for example, are not exported by default to the compute nodes. So, if you are not using the option to export variables (-V), modules will need to be loaded within the submission script.

Serial jobs

Simple serial job

To launch a simple serial job, serial_prog, in the current working directory (-cwd), exporting variables (-V), with a run time of 1 hour:

#$ -cwd

#$ -V

#$ -l h_rt=1:00:00

serial_prog

Requesting more memory

The default allocation is 2GB/slot; to request more memory use the -l h_vmem option. The maximum memory per slot is a function of the number of cores and the memory per node: a node with 16 cores and 64GB of memory provides at most 4GB per slot (64GB / 16 cores) when every slot is in use. For example, to request 8GB of memory:

#$ -cwd -V

#$ -l h_rt=1:00:00

#$ -l h_vmem=8G

serial_prog

How resource allocation works

Task array

To run a large number of identical jobs, for instance for parameter sweeps or a large number of input files, it is best to make use of task arrays. The batch system will automatically set up the variable $SGE_TASK_ID to correspond to the task number, so input and output files can be indexed by the task number. For instance, to run tasks 1 to 10:

#$ -cwd -V

#$ -l h_rt=1:00:00

#$ -t 1-10

serial_prog ${SGE_TASK_ID}

SGE_TASK_ID will take the values 1, 2, ..., 10.
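One common pattern is to build per-task file names from the task number. The sketch below assumes an input_N.dat / output_N.dat naming scheme and a helper called task_files, both invented for illustration.

```shell
#!/bin/bash
#$ -cwd -V
#$ -l h_rt=1:00:00
#$ -t 1-10

# Derive per-task file names from the task number.
# The input_N.dat / output_N.dat naming is an assumption for this example.
task_files() {
    local id="$1"
    echo "input_${id}.dat output_${id}.dat"
}

# Default to task 1 so the sketch also runs outside an array job.
task_files "${SGE_TASK_ID:-1}"
```

The two names could then be passed to serial_prog as arguments or used for input and output redirection.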

Task Array Indexing

The array stride can be a value other than 1. The following example executes four tasks, with IDs 1, 6, 11, and 16:

#$ -cwd -V

#$ -l h_rt=1:0:00

#$ -o ../logs

#$ -e ../logs

#$ -t 1-20:5

./serial_prog >> outputs/serial_task_index_job_${SGE_TASK_ID}.output

-bash-4.1$ qsub serial_task_index_job

Your job-array 10218.1-20:5 ("serial_task_index_job") has been submitted

-bash-4.1$ qstat

job-ID prior name user state submit/start at queue slots ja-task-ID

-----------------------------------------------------------------------------------------------------------------

10218 0.00000 serial_tas abs4 qw 06/02/2014 14:12:04 1 1-16:5

-bash-4.1$ qstat

job-ID prior name user state submit/start at queue slots ja-task-ID

-----------------------------------------------------------------------------------------------------------------

10218 0.55500 serial_tas abs4 r 06/02/2014 14:12:09 its-cluster@rnode0.york.ac.uk 1 1

10218 0.55500 serial_tas abs4 r 06/02/2014 14:12:09 its-cluster@rnode0.york.ac.uk 1 6

10218 0.55500 serial_tas abs4 r 06/02/2014 14:12:09 its-cluster@rnode0.york.ac.uk 1 11

10218 0.55500 serial_tas abs4 r 06/02/2014 14:12:09 its-cluster@rnode3.york.ac.uk 1 16

The variables SGE_TASK_FIRST, SGE_TASK_LAST, and SGE_TASK_STEPSIZE provide the task range and stride to the script.
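As a sketch of how the range and stride might be used, the functions below compute how many tasks an array has and where a given task falls in the sequence. The function names are made up for the example, and the numbers are those of the -t 1-20:5 job above; inside a real job the arguments would come from the SGE_TASK_* variables.

```shell
#!/bin/bash
# Number of tasks in an array job.
# usage: task_count FIRST LAST STEP
task_count() {
    echo $(( ($2 - $1) / $3 + 1 ))
}

# 1-based position of a task within the array.
# usage: task_position FIRST STEP ID
task_position() {
    echo $(( ($3 - $1) / $2 + 1 ))
}

# Values from the "-t 1-20:5" example.
echo "tasks: $(task_count 1 20 5), task 11 is number $(task_position 1 5 11)"
```

For the example array this reports four tasks, with task ID 11 being the third.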

Selecting irregular file names in array jobs

Often your data files cannot be referenced via a unique numerical index: the file names will be dates or unrelated strings. This example demonstrates processing a set of files with no numerical index. We first make a file containing a list of the filenames. A short awk command then returns the line indexed by the numerical argument.

abs4@ecgberht$ ls idata/

Wed Nov 19 14:22:26 GMT 2014  Wed Nov 19 14:25:14 GMT 2014

Wed Nov 19 14:22:32 GMT 2014  Wed Nov 19 14:25:21 GMT 2014

Wed Nov 19 14:22:37 GMT 2014  Wed Nov 19 14:25:28 GMT 2014

Wed Nov 19 14:22:45 GMT 2014  Wed Nov 19 14:25:36 GMT 2014

Wed Nov 19 14:22:52 GMT 2014  Wed Nov 19 14:25:43 GMT 2014

Wed Nov 19 14:23:00 GMT 2014  Wed Nov 19 14:25:51 GMT 2014

Wed Nov 19 14:23:07 GMT 2014  Wed Nov 19 14:25:58 GMT 2014

Wed Nov 19 14:23:15 GMT 2014  Wed Nov 19 14:26:06 GMT 2014

Wed Nov 19 14:23:22 GMT 2014  Wed Nov 19 14:26:13 GMT 2014

Wed Nov 19 14:23:29 GMT 2014  Wed Nov 19 14:26:20 GMT 2014

Wed Nov 19 14:23:37 GMT 2014  Wed Nov 19 14:26:28 GMT 2014

Wed Nov 19 14:23:44 GMT 2014  Wed Nov 19 14:26:35 GMT 2014

Wed Nov 19 14:23:52 GMT 2014  Wed Nov 19 14:26:43 GMT 2014

Wed Nov 19 14:23:59 GMT 2014  Wed Nov 19 14:26:50 GMT 2014

Wed Nov 19 14:24:07 GMT 2014  Wed Nov 19 14:26:57 GMT 2014

Wed Nov 19 14:24:14 GMT 2014  Wed Nov 19 14:27:05 GMT 2014

Wed Nov 19 14:24:21 GMT 2014  Wed Nov 19 14:27:12 GMT 2014

Wed Nov 19 14:24:29 GMT 2014  Wed Nov 19 14:27:20 GMT 2014

Wed Nov 19 14:24:36 GMT 2014  Wed Nov 19 14:27:27 GMT 2014

Wed Nov 19 14:24:44 GMT 2014  Wed Nov 19 14:27:35 GMT 2014

Wed Nov 19 14:24:51 GMT 2014  Wed Nov 19 14:27:42 GMT 2014

Wed Nov 19 14:24:59 GMT 2014  Wed Nov 19 14:27:50 GMT 2014

Wed Nov 19 14:25:06 GMT 2014  Wed Nov 19 14:27:57 GMT 2014

abs4@ecgberht$ ls -1 idata/ > data.files

abs4@ecgberht$ cat data.files

Wed Nov 19 14:22:26 GMT 2014

Wed Nov 19 14:22:32 GMT 2014

Wed Nov 19 14:22:37 GMT 2014

Wed Nov 19 14:22:45 GMT 2014

Wed Nov 19 14:22:52 GMT 2014

Wed Nov 19 14:23:00 GMT 2014

Wed Nov 19 14:23:07 GMT 2014

Wed Nov 19 14:23:15 GMT 2014

Wed Nov 19 14:23:22 GMT 2014

Wed Nov 19 14:23:29 GMT 2014

Wed Nov 19 14:23:37 GMT 2014

Wed Nov 19 14:23:44 GMT 2014

Wed Nov 19 14:23:52 GMT 2014

Wed Nov 19 14:23:59 GMT 2014

Wed Nov 19 14:24:07 GMT 2014

Wed Nov 19 14:24:14 GMT 2014

Wed Nov 19 14:24:21 GMT 2014

Wed Nov 19 14:24:29 GMT 2014

Wed Nov 19 14:24:36 GMT 2014

Wed Nov 19 14:24:44 GMT 2014

Wed Nov 19 14:24:51 GMT 2014

Wed Nov 19 14:24:59 GMT 2014

Wed Nov 19 14:25:06 GMT 2014

Wed Nov 19 14:25:14 GMT 2014

Wed Nov 19 14:25:21 GMT 2014

Wed Nov 19 14:25:28 GMT 2014

Wed Nov 19 14:25:36 GMT 2014

Wed Nov 19 14:25:43 GMT 2014

Wed Nov 19 14:25:51 GMT 2014

Wed Nov 19 14:25:58 GMT 2014

Wed Nov 19 14:26:06 GMT 2014

Wed Nov 19 14:26:13 GMT 2014

Wed Nov 19 14:26:20 GMT 2014

Wed Nov 19 14:26:28 GMT 2014

Wed Nov 19 14:26:35 GMT 2014

Wed Nov 19 14:26:43 GMT 2014

Wed Nov 19 14:26:50 GMT 2014

Wed Nov 19 14:26:57 GMT 2014

Wed Nov 19 14:27:05 GMT 2014

Wed Nov 19 14:27:12 GMT 2014

Wed Nov 19 14:27:20 GMT 2014

Wed Nov 19 14:27:27 GMT 2014

Wed Nov 19 14:27:35 GMT 2014

Wed Nov 19 14:27:42 GMT 2014

Wed Nov 19 14:27:50 GMT 2014

Wed Nov 19 14:27:57 GMT 2014

abs4@ecgberht$ more analyse_irr_Data.job

#$ -cwd -V

#$ -l h_rt=1:15:00

#$ -o logs

#$ -e logs

#$ -N R_analyse_I_Data

#$ -t 1-25

filename=$(awk NR==$SGE_TASK_ID data.files)

Rscript analyseData.R "idata/$filename" "results/$filename.rst"

abs4@ecgberht$
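The line-selection step can be tried on its own, without the scheduler. The sketch below wraps it in a helper called nth_line (a made-up name) and passes the index to awk with -v rather than splicing it into the program text; this is a variation on the script above, not the original author's code. The two sample names are taken from the listing above.

```shell
#!/bin/bash
# Select the Nth line of a file list. Quoting the result keeps file names
# that contain spaces intact.
# usage: nth_line N FILE
nth_line() {
    awk -v n="$1" 'NR == n' "$2"
}

# Self-contained demonstration with a temporary two-line list.
list=$(mktemp)
printf '%s\n' "Wed Nov 19 14:22:26 GMT 2014" "Wed Nov 19 14:22:32 GMT 2014" > "$list"
filename=$(nth_line 2 "$list")
echo "idata/$filename"
rm -f "$list"
```

In a job script the index would be "$SGE_TASK_ID" and the list would be data.files.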

Dependent Arrays

The command qsub -hold_jid_ad is used to make an array job's tasks dependent on the corresponding tasks of another array job. In the following example, array task n of task_job2 will only run after task n of task_job1 has completed. Task n of task_job3 will only run after tasks n and n+1 of task_job2 have finished.

qsub -t 1-100 task_job1

qsub -t 1-100 -hold_jid_ad task_job1 task_job2

qsub -t 1-100:2 -hold_jid_ad task_job2 task_job3

Task Concurrency

The qsub -tc <number> option can be used to limit the number of concurrent tasks that run. You can use this so as not to dominate the cluster with your jobs.
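The sketch below shows the option as a script directive, together with a rough estimate of how many rounds an array needs when every task takes about the same time. The numbers (100 tasks, 10 at a time), the helper name rounds_needed, and the equal-duration assumption are all illustrative; the scheduler gives no such guarantee.

```shell
#!/bin/bash
#$ -cwd -V
#$ -l h_rt=1:00:00
#$ -t 1-100
#$ -tc 10

# Rough estimate: rounds needed if all tasks take similar time,
# i.e. the ceiling of TASKS / LIMIT.
# usage: rounds_needed TASKS LIMIT
rounds_needed() {
    echo $(( ($1 + $2 - 1) / $2 ))
}

echo "100 tasks, 10 at a time: about $(rounds_needed 100 10) rounds"
```

Multiplying the number of rounds by the run time of one task gives a rough idea of how long the whole array will occupy the queue.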

Parallel jobs

Job to Core binding

Binding a job to specific cores can sometimes improve performance. Add the option "-binding linear" to the job script:

#$ -binding linear

For more information, please have a look at:

http://arc.liv.ac.uk/repos/hg/sge/doc/devel/rfe/job2core_binding_spec.txt

Shared memory

Shared memory jobs should be submitted using the -pe smp <cores> flag. The maximum number of cores that can be requested for a shared memory job is limited by the number of cores available in a single node; in the case of YARCC this is 16 cores. Note that if you are running an OpenMP job, the OMP_NUM_THREADS environment variable is automatically set to the requested number of cores by the batch system. To run a 16-process shared memory job for 1 hour:

#$ -cwd -V

#$ -l h_rt=1:00:00

#$ -pe smp 16

openmp_code
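For threaded programs that take their thread count from the environment, a script can pick the value defensively. In the sketch below, the fallback from OMP_NUM_THREADS to NSLOTS and then to 1 is an assumption of this example (as is the helper name thread_count); the text above only states that the batch system sets OMP_NUM_THREADS.

```shell
#!/bin/bash
#$ -cwd -V
#$ -l h_rt=1:00:00
#$ -pe smp 16

# Choose a thread count: prefer OMP_NUM_THREADS, then NSLOTS, then 1.
# The fallbacks are illustrative and let the script run outside a job.
thread_count() {
    echo "${OMP_NUM_THREADS:-${NSLOTS:-1}}"
}

export OMP_NUM_THREADS="$(thread_count)"
echo "running with $OMP_NUM_THREADS threads"
```

The program itself (openmp_code in the example above) would be started after the export.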

Large shared memory

To request more than the default 2GB/process of memory, use the -l h_vmem flag. For instance, to request 8 cores and 3500M/core (a total of 28GB of memory):

#$ -cwd -V

#$ -l h_rt=1:00:00

#$ -l h_vmem=3500M

#$ -pe smp 8

openmp_code
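Because h_vmem is a per-slot limit, the total request is the per-slot value multiplied by the number of slots. A small sketch of that arithmetic, with a made-up helper name:

```shell
#!/bin/bash
# Total memory requested by a job, in megabytes.
# usage: total_mem_mb SLOTS PER_SLOT_MB
total_mem_mb() {
    echo $(( $1 * $2 ))
}

echo "8 slots x 3500M = $(total_mem_mb 8 3500)M"
```

For the example above this gives 28000M, i.e. roughly 28GB.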

Using the large-memory nodes

Jobs requiring access to 256GB nodes can be submitted by requesting multiple SMP slots, and hence gain access to the total memory of the requested node. The per-slot memory requested (via the h_vmem resource) for a 20 core node will be 12.8G.

#$ -cwd -V

#$ -l h_rt=1:00:00

#$ -l h_vmem=12800M

#$ -pe smp 8

serial_prog
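The per-slot figure comes from dividing the node's memory by its core count. The sketch below reproduces that calculation; it treats 1GB as 1000MB, as the example above does, and the helper name is made up for illustration.

```shell
#!/bin/bash
# Largest per-slot memory (in MB) that still lets every core of a node
# be used. Treats 1GB as 1000MB, matching the 12.8G figure in the text.
# usage: per_slot_mb NODE_MEM_GB CORES
per_slot_mb() {
    echo $(( $1 * 1000 / $2 ))
}

echo "256GB node, 20 cores: $(per_slot_mb 256 20)M per slot"
```

The same calculation can be applied to other node sizes when choosing an h_vmem value.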

Please note that currently the majority of YARCC consists of 16, 20, and 24 core nodes with between 64GB and 256GB of memory.

MPI

This MPI program was compiled using the Intel C compiler.

-bash-4.1$ module load ics/2013_sp1.1.046

-bash-4.1$ cat openmpi_example.c

/*

mpi test program

*/

#include <mpi.h>

#include <stdio.h>

#include <unistd.h>

int main(int argc, char **argv)

{

int rank;

char hostname[256];

MPI_Init(&argc,&argv);

MPI_Comm_rank(MPI_COMM_WORLD, &rank);

gethostname(hostname,255);

printf("Hello world! I am process number: %d on host %s\n", rank, hostname);

MPI_Finalize();

return 0;

}

-bash-4.1$ mpicc -o openmpi_example openmpi_example.c

-bash-4.1$

Example job script for 64 processes

-bash-4.1$ cat openmpi_job

#$ -cwd -V

#$ -l h_rt=1:0:00

#$ -o ../logs

#$ -e ../logs

#$ -pe mpi 64

echo `date`: executing openmpi_example on ${NSLOTS} slots

mpirun -np $NSLOTS $SGE_O_WORKDIR/openmpi_example

Running the program. Note the "-t" option to qstat, which displays extended information about the sub-tasks of parallel jobs.

-bash-4.1$ qsub openmpi_job

Your job 432912 ("openmpi_job") has been submitted

-bash-4.1$ qstat

job-ID prior name user state submit/start at queue slots ja-task-ID

-----------------------------------------------------------------------------------------------------------------

432912 0.00000 openmpi_jo abs4 qw 09/29/2014 12:59:13 64

-bash-4.1$ qstat -t

job-ID prior name user state submit/start at queue master ja-task-ID task-ID state cpu mem io stat failed

-----------------------------------------------------------------------------------------------------------------------------------------------------------------------

432912 0.60500 openmpi_jo abs4 r 09/29/2014 12:59:26 its-day@rnode18.york.ac.uk MASTER r 00:00:00 0.00057 0.00112

its-day@rnode18.york.ac.uk SLAVE

its-day@rnode18.york.ac.uk SLAVE

its-day@rnode18.york.ac.uk SLAVE

its-day@rnode18.york.ac.uk SLAVE

its-day@rnode18.york.ac.uk SLAVE

its-day@rnode18.york.ac.uk SLAVE

its-day@rnode18.york.ac.uk SLAVE

its-day@rnode18.york.ac.uk SLAVE

its-day@rnode18.york.ac.uk SLAVE

its-day@rnode18.york.ac.uk SLAVE

its-day@rnode18.york.ac.uk SLAVE

its-day@rnode18.york.ac.uk SLAVE

its-day@rnode18.york.ac.uk SLAVE

its-day@rnode18.york.ac.uk SLAVE

its-day@rnode18.york.ac.uk SLAVE

its-day@rnode18.york.ac.uk SLAVE

its-day@rnode18.york.ac.uk SLAVE

its-day@rnode18.york.ac.uk SLAVE

its-day@rnode18.york.ac.uk SLAVE

its-day@rnode18.york.ac.uk SLAVE

432912 0.60500 openmpi_jo abs4 r 09/29/2014 12:59:26 its-long@rnode1.york.ac.uk SLAVE

its-long@rnode1.york.ac.uk SLAVE

its-long@rnode1.york.ac.uk SLAVE

its-long@rnode1.york.ac.uk SLAVE

its-long@rnode1.york.ac.uk SLAVE

its-long@rnode1.york.ac.uk SLAVE

its-long@rnode1.york.ac.uk SLAVE

its-long@rnode1.york.ac.uk SLAVE

its-long@rnode1.york.ac.uk SLAVE

its-long@rnode1.york.ac.uk SLAVE

its-long@rnode1.york.ac.uk SLAVE

its-long@rnode1.york.ac.uk SLAVE

its-long@rnode1.york.ac.uk SLAVE

its-long@rnode1.york.ac.uk SLAVE

its-long@rnode1.york.ac.uk SLAVE

its-long@rnode1.york.ac.uk SLAVE

432912 0.60500 openmpi_jo abs4 r 09/29/2014 12:59:26 its-day@rnode19.york.ac.uk SLAVE

its-day@rnode19.york.ac.uk SLAVE

its-day@rnode19.york.ac.uk SLAVE

its-day@rnode19.york.ac.uk SLAVE

its-day@rnode19.york.ac.uk SLAVE

its-day@rnode19.york.ac.uk SLAVE

its-day@rnode19.york.ac.uk SLAVE

its-day@rnode19.york.ac.uk SLAVE

its-day@rnode19.york.ac.uk SLAVE

its-day@rnode19.york.ac.uk SLAVE

its-day@rnode19.york.ac.uk SLAVE

its-day@rnode19.york.ac.uk SLAVE

its-day@rnode19.york.ac.uk SLAVE

its-day@rnode19.york.ac.uk SLAVE

its-day@rnode19.york.ac.uk SLAVE

its-day@rnode19.york.ac.uk SLAVE

its-day@rnode19.york.ac.uk SLAVE

its-day@rnode19.york.ac.uk SLAVE

its-day@rnode19.york.ac.uk SLAVE

its-day@rnode19.york.ac.uk SLAVE

432912 0.60500 openmpi_jo abs4 r 09/29/2014 12:59:26 test@rnode5.york.ac.uk SLAVE

test@rnode5.york.ac.uk SLAVE

test@rnode5.york.ac.uk SLAVE

test@rnode5.york.ac.uk SLAVE

test@rnode5.york.ac.uk SLAVE

test@rnode5.york.ac.uk SLAVE

test@rnode5.york.ac.uk SLAVE

test@rnode5.york.ac.uk SLAVE

-bash-4.1$

Output from the program:

-bash-4.1$ cat ../logs/openmpi_job.o432912

Mon Sep 29 12:59:26 BST 2014: executing openmpi_example on 64 slots

Hello world! I am process number: 52 on host rnode19

Hello world! I am process number: 21 on host rnode1

Hello world! I am process number: 53 on host rnode19

Hello world! I am process number: 4 on host rnode18

Hello world! I am process number: 22 on host rnode1

Hello world! I am process number: 54 on host rnode19

Hello world! I am process number: 5 on host rnode18

Hello world! I am process number: 61 on host rnode5

Hello world! I am process number: 20 on host rnode1

Hello world! I am process number: 55 on host rnode19

Hello world! I am process number: 6 on host rnode18

Hello world! I am process number: 62 on host rnode5

Hello world! I am process number: 23 on host rnode1

Hello world! I am process number: 36 on host rnode19

Hello world! I am process number: 7 on host rnode18

Hello world! I am process number: 63 on host rnode5

Hello world! I am process number: 29 on host rnode1

Hello world! I am process number: 37 on host rnode19

Hello world! I am process number: 16 on host rnode18

Hello world! I am process number: 56 on host rnode5

Hello world! I am process number: 38 on host rnode19

Hello world! I am process number: 17 on host rnode18

Hello world! I am process number: 57 on host rnode5

Hello world! I am process number: 39 on host rnode19

Hello world! I am process number: 18 on host rnode18

Hello world! I am process number: 58 on host rnode5

Hello world! I am process number: 48 on host rnode19

Hello world! I am process number: 19 on host rnode18

Hello world! I am process number: 59 on host rnode5

Hello world! I am process number: 49 on host rnode19

Hello world! I am process number: 0 on host rnode18

Hello world! I am process number: 60 on host rnode5

Hello world! I am process number: 50 on host rnode19

Hello world! I am process number: 1 on host rnode18

Hello world! I am process number: 51 on host rnode19

Hello world! I am process number: 2 on host rnode18

Hello world! I am process number: 43 on host rnode19

Hello world! I am process number: 3 on host rnode18

Hello world! I am process number: 30 on host rnode1

Hello world! I am process number: 8 on host rnode18

Hello world! I am process number: 31 on host rnode1

Hello world! I am process number: 9 on host rnode18

Hello world! I am process number: 32 on host rnode1

Hello world! I am process number: 10 on host rnode18

Hello world! I am process number: 33 on host rnode1

Hello world! I am process number: 11 on host rnode18

Hello world! I am process number: 34 on host rnode1

Hello world! I am process number: 12 on host rnode18

Hello world! I am process number: 35 on host rnode1

Hello world! I am process number: 13 on host rnode18

Hello world! I am process number: 24 on host rnode1

Hello world! I am process number: 14 on host rnode18

Hello world! I am process number: 25 on host rnode1

Hello world! I am process number: 15 on host rnode18

Hello world! I am process number: 26 on host rnode1

Hello world! I am process number: 44 on host rnode19

Hello world! I am process number: 27 on host rnode1

Hello world! I am process number: 45 on host rnode19

Hello world! I am process number: 28 on host rnode1

Hello world! I am process number: 46 on host rnode19

Hello world! I am process number: 47 on host rnode19

Hello world! I am process number: 41 on host rnode19

Hello world! I am process number: 42 on host rnode19

Hello world! I am process number: 40 on host rnode19

-bash-4.1$
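To see how the processes were spread over the nodes, the output can be tallied per host. The sketch below reads "Hello world!" lines on standard input and counts them by the last field; the function name count_per_host and the three sample lines are made up for the demonstration.

```shell
#!/bin/bash
# Count how many MPI processes reported from each host, reading the
# program's "Hello world!" lines on standard input.
count_per_host() {
    awk '/^Hello world!/ { n[$NF]++ } END { for (h in n) print h, n[h] }' | sort
}

# Small made-up sample in the same format as the output above.
printf '%s\n' \
    "Hello world! I am process number: 0 on host rnode18" \
    "Hello world! I am process number: 20 on host rnode1" \
    "Hello world! I am process number: 1 on host rnode18" | count_per_host
```

Run against the real log, e.g. count_per_host < ../logs/openmpi_job.o432912, this would show the 64 processes split across rnode18, rnode1, rnode19 and rnode5.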

MPI and Node Affinity

Suppose your job requires its processes to reside on cores of the same node, i.e. you want to use the smallest possible number of nodes to run your job. This can be achieved by using the "mpi-16" and "mpi-20" parallel environments, which match the number of cores on the nodes. The number of cores you request must be a multiple of 16 or 20 respectively, i.e. 16, 32, 48, 64, ... or 20, 40, 60, 80, ...

#$ -cwd -V

#$ -l h_rt=0:10:00

#$ -l h_vmem=1G

#$ -o logs

#$ -e logs

#$ -N mpi-32_job

#$ -M andrew.smith@york.ac.uk

#$ -m beas

#$ -pe mpi-16 32

echo `date`: executing mpi_32 example on ${NSLOTS} slots

mpirun -np $NSLOTS $SGE_O_WORKDIR/mpi_example

Fri Oct 23 10:50:16 BST 2015: executing mpi_32 example on 32 slots

Hello world from processor rnode12, rank 4 out of 32 processors

Hello world from processor rnode12, rank 0 out of 32 processors

Hello world from processor rnode12, rank 1 out of 32 processors

Hello world from processor rnode12, rank 2 out of 32 processors

Hello world from processor rnode12, rank 3 out of 32 processors

Hello world from processor rnode12, rank 5 out of 32 processors

Hello world from processor rnode12, rank 6 out of 32 processors

Hello world from processor rnode12, rank 7 out of 32 processors

Hello world from processor rnode12, rank 8 out of 32 processors

Hello world from processor rnode12, rank 9 out of 32 processors

Hello world from processor rnode12, rank 10 out of 32 processors

Hello world from processor rnode12, rank 11 out of 32 processors

Hello world from processor rnode12, rank 12 out of 32 processors

Hello world from processor rnode12, rank 13 out of 32 processors

Hello world from processor rnode12, rank 14 out of 32 processors

Hello world from processor rnode12, rank 15 out of 32 processors

Hello world from processor rnode15, rank 16 out of 32 processors

Hello world from processor rnode15, rank 17 out of 32 processors

Hello world from processor rnode15, rank 18 out of 32 processors

Hello world from processor rnode15, rank 19 out of 32 processors

Hello world from processor rnode15, rank 20 out of 32 processors

Hello world from processor rnode15, rank 21 out of 32 processors

Hello world from processor rnode15, rank 22 out of 32 processors

Hello world from processor rnode15, rank 23 out of 32 processors

Hello world from processor rnode15, rank 24 out of 32 processors

Hello world from processor rnode15, rank 25 out of 32 processors

Hello world from processor rnode15, rank 26 out of 32 processors

Hello world from processor rnode15, rank 27 out of 32 processors

Hello world from processor rnode15, rank 28 out of 32 processors

Hello world from processor rnode15, rank 29 out of 32 processors

Hello world from processor rnode15, rank 30 out of 32 processors

Hello world from processor rnode15, rank 31 out of 32 processors
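Since the slot count has to be a multiple of the node size for these parallel environments, it may be worth checking a request before submitting. The helpers below are a sketch with made-up names; they only do the arithmetic and do not talk to the scheduler.

```shell
#!/bin/bash
# Succeeds if SLOTS is a positive multiple of CORES_PER_NODE.
# usage: valid_slots SLOTS CORES_PER_NODE
valid_slots() {
    [ "$1" -gt 0 ] && [ $(( $1 % $2 )) -eq 0 ]
}

# Number of whole nodes a valid request occupies.
# usage: nodes_needed SLOTS CORES_PER_NODE
nodes_needed() {
    echo $(( $1 / $2 ))
}

for n in 32 40 48; do
    if valid_slots "$n" 16; then
        echo "$n slots: ok for mpi-16 ($(nodes_needed "$n" 16) nodes)"
    else
        echo "$n slots: not a multiple of 16"
    fi
done
```

A request of 40 slots fails the check for mpi-16 but would pass for mpi-20, where it corresponds to two nodes.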

OpenMPI

For more information on using OpenMPI, please see: https://www.open-mpi.org/faq/?category=sge